The Department of Biomedical Data Science’s External Engagement Program supports connections between outside organizations and our faculty, graduate students, and postdoctoral researchers.

We welcome companies, nonprofits, and government entities to join our growing ecosystem focused on collaboration to advance precision health by leveraging complex real-world data through development of novel analytical methodologies.

There are many ways to engage with DBDS, as outlined below, including membership in our affiliate programs, sponsored research, student fellowships, diversity initiatives, talent recruitment, and more.

Affiliate Member Benefits:

Education Affiliate Members

Annual membership: $40,000

Benefits: Thought-leadership, research insights, connections with students/faculty for recruiting and potential research partners

Include Annually:

- Networking and Recruiting Forum: with DBDS faculty, students, postdocs

- Symposium on broad research areas: with student posters, faculty talks, panels

- Potential Speaking Opportunities in classes and/or workshops; connect with students informally

- Faculty Liaison to connect with 60+ affiliated DBDS/BMI faculty

- PDF resume book with more than 100 DBDS graduate students

Funding Supports: curriculum development, programs connecting external partners, faculty and students, and student travel to technical conferences.

Center of Excellence Affiliate Members*

The intent is to establish several Centers of Excellence around key research areas in the coming year, including Precision Health with Pharmacogenomics, Multimodal Data Integration, and Computational Biology/AI.

Annual Membership: $275K/year membership in a specific center

Center I: Precision Health with Pharmacogenomics (PGX)

Mission: To develop a nationally recognized center for pharmacogenomics research and best practices in the implementation of clinical pharmacogenomics. The center will include development of tools to leverage the knowledge in the NIH-sponsored PharmGKB (The Pharmacogenomics Knowledgebase; www.pharmgkb.org), create translational tools for clinical report generation, and a mobile application on pharmacogenomics knowledge to be used by both providers and patients.

Benefits: All of the above, plus:

- Regular updates on the progress of scientific developments in pharmacogenomics, and development in innovative software solutions and mobile applications

- Touchpoints with key faculty and other Center members

- Invitations to relevant seminars

- Opportunities for visiting scholars

- Engagement with Center of Excellence leadership regarding ways to leverage and implement pharmacogenomics and the pharmacogenomics knowledgebase; opportunities to collaborate on new tool and knowledge development

- Facilitated engagement with research

- DBDS External Partner Advisory Council

Center II: Multimodal Data Integration

Mission: To transform the way we address acute and life-threatening diseases and chronic health conditions through analysis of new and existing data sources using state-of-the-art AI/ML technologies combined with multimodal biomedical data integration. To build an open data and research collaboration that supports research in these areas and has worldwide appeal.

Benefits:

- Facilitated engagement with research

- DBDS External Advisory Council

- Regular updates on the progress of research and translational work conducted by faculty

- Invitations to attend and possibly speak in relevant seminars, workshops, and classes

- Opportunities for visiting scholars

Strategic Partnerships

For additional opportunities to collaborate around sponsored research, or gifts to support student fellowships, diversity initiatives, and other department initiatives, please contact Karen Matthys for more information.

Collaborators

![image293080[68]](https://dbds.stanford.edu/wp-content/uploads/2023/10/image29308068-2-e1697149735282.jpg)

Other Information

All memberships are subject to Stanford University Policies for Industry Affiliates Programs. Please see the Stanford Research Policy Handbook for details. Information on the Stanford University visiting scholar program can be found here.

The Center for Precision Health with Pharmacogenomics will use and develop open-source software, and it is the intention of the Center that any software will be released under an open-source model.

* Companies may provide additional funding and all research results arising from the use of the additional funding will be shared with all program members and the general public. A Program Member may request the additional funding be used to support a particular area of program research identified on the program’s website, or the program research of a named faculty member, as long as the faculty is identified on the program website as participating in the Program. In either instance, the director of the Affiliate Program will determine how the additional funding will be used in the program’s research.

Collaboration and Careers Forum

Thank you to everyone who contributed to another successful C&C Forum, including attendees, faculty, panelists, and external partners who participated in the Collaboration Forum.

January 23rd: 8:00AM to 1:30PM

Frances C. Arrillaga Alumni Center

This Forum is designed to engage leaders from companies, government agencies and other organizations external to Stanford with our faculty, graduate students, postdocs and staff scientists. It offers a unique opportunity to discuss advancements in AI, data science and multimodal data integration to accelerate precision healthcare and medicine through a connection with our Department of Biomedical Data Science (DBDS) community.

Agenda:

8:00am-9:00am: Check in and light breakfast

9:00am-9:30am: Overview of DBDS Initiatives and 2024 Plans, from Department Chair, Sylvia Plevritis

9:30am-11:00am: Faculty lightning talks

- Aaron Newman, Professor of Biomedical Data Science Abstract

- Serena Yeung, Professor of Biomedical Data Science and, by courtesy, of Computer Science and of Electrical Engineering Abstract

- Nima Aghaeepour, Professor of Anesthesiology, Perioperative and Pain Medicine, of Pediatrics and, by courtesy, of Biomedical Data Science Abstract

- Manuel Rivas, Professor of Biomedical Data Science Abstract

- Tina Hernandez-Boussard, Associate Dean of Research; Professor of Medicine (Biomedical Informatics), of Biomedical Data Science, of Surgery and, by courtesy, of Epidemiology and Population Health Abstract

- Akshay Chaudhari, Professor of Radiology and, by courtesy, of Biomedical Data Science Abstract

- Nigam Shah, Chief Data Scientist for Stanford Health Care; Professor of Medicine and of Biomedical Data Science Abstract

11:00am-12:00pm: Collaboration Forum with faculty, students, postdocs, companies and other outside organizations

12:00pm-12:30pm: Lunch

12:30pm-1:30pm: External Partners Panel: The Future of Research in Industry and Academia in the Age of AI, moderated by Bryan Bunning and Kristy Carpenter, PhD students

Panelists:

- Wei Li, Vice President and General Manager of Machine Learning Performance at Intel

- Aicha BenTaieb, Principal AI/ML Scientist at Genentech

- Jeffrey Venstrom, Chief Medical Officer at GRAIL

- Anitha Kannan, Founding Member/Head of Machine Learning at Curai

- Scott Penberthy, Managing Director, Applied AI, Office of the CTO at Google

View panelist bios here.

Contact dbds_departmentevents@stanford.edu for more information.

Collaboration and Careers Forum Archive

Generative AI Workshop Overview

The Department of Biomedical Data Science held a special Generative AI in Healthcare and Medicine Innovation Workshop on Tuesday, May 30th, for a packed room of graduate students and faculty from across the School of Medicine, Graduate School of Business, Engineering and H&S, along with outside partners from industry. The goals were to bring together interdisciplinary teams and perspectives to ideate on how generative AI can potentially help solve significant real-world healthcare challenges, and to broaden understanding of responsible AI and potential unintended consequences. The d.school design-thinking framework was introduced to the participants, many of whom had never experienced such a brainstorming session previously.

The workshop had over 180 registrants and started with a standing-room-only panel discussion with senior leaders in industry and academia. The panel was moderated by Professor Sylvia Plevritis, Chair of the Dept. of Biomedical Data Science (DBDS) at Stanford, and notable panelists included:

- Eric Horvitz (alumnus of the Stanford Biomedical Data Science program class of 1990), Chief Scientific Officer of Microsoft and member of President Biden’s Council of Advisors on Science and Technology

- Jia Li, Co-Teacher of Stanford Generative AI in Medicine course spring quarter, and Co-Founder of HealthUnity

- Lori Sherer, Partner at Bain & Company

- Professor Nigam Shah, Chief Data Scientist at Stanford Health Care, and faculty in the Department of Biomedical Data Science

The panel focused on solutions and challenges with generative AI in healthcare, and a few key themes emerged:

Promising Opportunities: There are exciting opportunities for generative AI to address critical areas of healthcare and medicine, such as to hasten drug discovery, reduce hospital operational workloads, and to provide more accessible health services to those who often don’t have coverage. Horvitz shared that he is most excited about the applications with protein structures & interactions, such as for new drug discovery. “There are new methods in generative AI, including methods that employ ‘diffusion modeling’ to help design new proteins, such as new vaccines and therapeutics,” he commented. “There are also applications of large-scale language models in the biosciences, that could do synthesis across the wide bioscience literature to do such tasks as help to identify candidates for drug repurposing by reasoning about pathways that link medications, protein expression, and illness.”

With regard to generative AI in clinical applications, Professor Nigam Shah commented that we tend to get excited about the generative capabilities of language models. He posited that the real value comes not from the ability to generate, but rather from the internal representations that these models learn. How does a patient become a vector of 256 numbers, and what else can you do with the vector? Professor Shah’s research with Microsoft on generative AI in clinical settings is testing the bounds of ChatGPT capabilities: https://hai.stanford.edu/news/how-well-do-large-language-models-support-clinician-information-needs. Lori Sherer from Bain gave a few statistics: “Overall rate of misdiagnosis in the US is about 20%, leading to roughly 10% of patient deaths. And 30% of patients don’t follow recommended treatment plans.” Generative AI solutions could potentially reduce error rates and encourage positive healthy behaviors among patients.

There are already some impressive early success cases in applying generative AI to healthcare and medicine, such as the work of DBDS Professor James Zou’s lab on generative AI for novel antibiotics, including the first one that has been experimentally validated (paper in review). However, the panelists agreed that most of the early wins with generative AI in the field will likely come from lower-risk applications. Sherer explained that back-office applications are the target with her healthcare and life sciences clients, and Horvitz added that it’s already happening today with large corporations in other industries, including finance.

Unintended Consequences:

Jia Li stressed that responsible AI and inclusiveness are very serious concerns. For example: “The accessibility is quite important for people from rural areas of India, Africa and other countries. The use of generative AI may not be effective unless the knowledge is understandable for these users.” Lori Sherer shared her perspective in consulting, saying “all of our clients [are] very concerned about biases. People are moving ahead with rigor and caution, but they are not waiting for government oversight.” Shah added that “it’s the inappropriate sharing of data that may lead to biases later on.” He also highlighted the confusion about privacy versus security, saying “HIPAA is not about privacy; it is a misunderstood law. It requires you to send patient info to another provider if needed for care delivery.” With regard to security, Shah posed: “No one wants your data accessible to 3rd parties. What does it mean to have security? There isn’t a framework right now. What is the risk of de-identification?”

Horvitz shared the analogy of the pilot/co-pilot situation, saying: “we seek designs and mechanisms for human-AI interaction that put the human in the ‘pilot’s seat’ and where AI plays the role of ‘co-pilot’ versus the other way around.” Regarding end-to-end security, Horvitz mentioned that “enterprise-grade cloud computing solutions” now provide organizations with private instances of the foundation models “so that data is not shared, and also enable the use of cryptographic methods that allow for HIPAA-compliant communication to and from the AI models, so that sensitive medical data is protected.”

Future Perspective: Many improvements to generative AI are coming and new opportunities lie ahead. On the technology side, Horvitz shared that “very shortly – we’ll see multimodal capabilities of ChatGPT4 – with vision and language together.” Shah spoke about digital twin technology that could allow specific drug recommendations for each patient and that has the potential to accelerate biological discovery. With generative AI creating synthetic data to feed a digital twin model, this could be a significant step forward in precision health. Li commented: “We have a lot of silo’d data in healthcare. How can we leverage such data so that gen AI models can gain meaning out of it, and we can personalize the outcome for patients?” She added that generative AI can leverage multimodal data but can also generate synthetic data so that privacy can be protected in the studies. “The field is moving so fast and the potential of gen AI in healthcare is still yet to be seen,” she said.

Advice for Students: Lori Sherer stressed that there are massive opportunities for students with generative AI at every layer of technology development and implementation – from models to applications to change management in healthcare. Horvitz commented: “If I were in school, I’d be going back to neurobiology.” He shared his continued fascination with the brain and advised students to stay flexible and gain skills collaborating with others on projects, reflecting the interdisciplinary nature of this field. Shah added that students need to “understand incentives on a project. Who pays who, how often and for what? If you really want to understand healthcare, you need to know that and not just basic biology.” And Jia Li advised students to “pursue what you’re most excited about, and keep on learning about the latest advancements. Interdisciplinary knowledge will help you go a long way.”

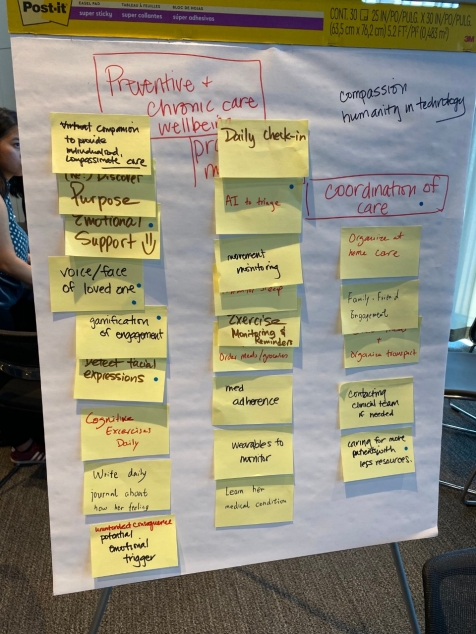

Following the panel, the audience broke into small table groups to ideate around particular problem statements in healthcare and medicine that could potentially be improved with generative AI. These ranged from challenges in life sciences and drug discovery to individual care delivery to healthcare systems.

The table brainstorming session was led by senior leaders internal and external to Stanford, including Sylvia Plevritis, Faculty and Chair of the Department of Biomedical Data Science; Scott Penberthy, Managing Director of Applied AI at Google; Matt Lungren, Chief Medical Information Officer at Microsoft; Lori Sherer, Partner at Bain & Company; Jia Li, Co-Teacher of Stanford Generative AI in Medicine course spring quarter and Co-Founder of HealthUnity; George Savage, Managing Director of Spring Ridge Ventures; and Peter Ro, Medical Director of Advances in Primary Care Palo Alto Medical Foundation.

The energy in the room was extremely high throughout the entire session and many groups were standing huddled around their flip charts, adding ideas on post-it notes and then clustering the brainstorming.

Here are several examples of the ideation session brainstorming outcome:

1. Problem Statement: Predicting recurrence of breast cancer: how likely is a woman to have a recurrence of estrogen receptor (ER) positive breast cancer? Can we use generative AI to explore other warning signs by continuously monitoring the electronic health record (EHR)?

Concrete Example: Sally just got diagnosed with ER positive and HER2 negative breast cancer, the most common type (70% of breast cancer). She has a risk of recurrence that is constant in the next 15 years. What can she do to reduce her risk and reduce her stress level?

Key Insights: The discussion started with focus on biomarkers and clinical trials; however, the main focus of the brainstorming ended up around solutions to reduce stress and anxiety for women in this situation. For the woman who knows she has the same risk for 15 years, the table brainstormed on how to manage the stress level and provide some peace of mind. If there is real-time monitoring in a background system, then the woman may not have to worry as much. Perhaps the stress could be lowered if the woman knows that the burden is on someone else’s shoulders to monitor the situation for them. This system could generate the risk indications and alert their doctors as needed.

2. Problem Statement: How do we audit large language models for bias and safety? And how do we make LLMs more trustworthy in medical and healthcare applications?

Key Insights: The key takeaways center on data quality, verification, and transparency, three important pillars for understanding and evaluating safety and bias concerns in large language models. In particular:

1. We should create better model cards. Model cards should provide organized information about how the model was trained, which data was used, and how it was evaluated. This can help both users and practitioners understand the model’s background and any potential biases involved.

2. Then, we need to evaluate datasets themselves more effectively and develop a quality index to gain a better understanding of the data we are using. This, of course, involves considering factors like how well the data represents different perspectives and whether any biases are present.

3. Finally, we should carry out continuous testing and verification even after the model is deployed. Many times, issues and biases are discovered along the way, and by regularly checking the models, we can identify and address these issues.
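As a rough illustration of the first point, a model card can be treated as a small structured record that is checked for gaps before a model is shared. The sketch below is only one possible shape: the `ModelCard` class, its field names, and the example values are hypothetical and do not come from the workshop or from any published model-card standard.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class ModelCard:
    """Hypothetical minimal model card; all field names are illustrative."""

    model_name: str
    training_summary: str            # how the model was trained
    training_data: List[str]         # which datasets were used
    evaluation: Dict[str, float]     # metric name -> score
    known_limitations: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Return the names of sections that were left empty."""
        return [name for name, value in asdict(self).items() if not value]


# Placeholder values, not a real model or dataset.
card = ModelCard(
    model_name="example-clinical-summarizer",
    training_summary="Fine-tuned on de-identified notes (placeholder).",
    training_data=["hypothetical-notes-v1"],
    evaluation={},
)

print(json.dumps(asdict(card), indent=2))
print("Sections still to fill in:", card.missing_sections())
```

A check like `missing_sections` could run whenever a card is updated, so that an empty evaluation or limitations section is flagged rather than silently published.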

3. Problem Statement: Hallucinations and more efficient clinical paperwork/communications: How do we combine genAI, vector databases, information retrieval to limit hallucination in patient-facing systems, e.g., explaining care & benefits, understanding a diagnosis, preparing a clinical note?

Concrete example: The busy oncologist needs to summarize the 60 patients she saw today, writing “authorization approvals” for insurance companies after dinner.

Brainstorming Outcome: The team felt that putting the clinician in charge, and focusing the work on a patient-by-patient basis, was best. The AI would draft, and the clinician would check the work. Techniques like retrieval-augmented generation (RAG) and ReAct can help reduce hallucinations. One key idea to support doctors was an Intuit-style interface like TurboTax. This would still need to be approved by the hospitals for HIPAA, etc. The clinician-first, simple and intuitive interface, and the analogy to the IRS and banks, was really compelling.
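To make the retrieval idea concrete, here is a minimal sketch of retrieval-grounded prompting: look up the most relevant source passage first, and decline to draft when nothing relevant is found. Everything in it is an assumption for illustration: the bag-of-words `embed` function stands in for a learned embedding model and vector database, the `min_score` threshold is arbitrary, and the example passages are made up.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words vector; a real system would use a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, min_score=0.3):
    """Return the best-matching passage, or None if nothing is close enough."""
    if not documents:
        return None
    query_vec = embed(query)
    scored = [(cosine(query_vec, embed(doc)), doc) for doc in documents]
    best_score, best_doc = max(scored, key=lambda pair: pair[0])
    return best_doc if best_score >= min_score else None


def build_prompt(query, documents):
    """Ground the draft in a retrieved passage; abstain when none is found."""
    passage = retrieve(query, documents)
    if passage is None:
        return None  # no supporting source, so do not draft an answer
    return f"Answer using only this source:\n{passage}\n\nQuestion: {query}"


# Made-up passages standing in for a clinic's reference material.
DOCS = [
    "MRI scans require prior authorization from the insurer.",
    "Annual physical exams are covered in full.",
]

print(build_prompt("Does an MRI require prior authorization?", DOCS))
print(build_prompt("What is the weather today?", DOCS))
```

The abstain path is the design choice that matters here: when no passage clears the threshold, the system returns nothing for the clinician to review instead of letting the model improvise.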

4. Problem Statement: Clinical Workflow: How can we successfully implement generative AI solutions into the clinical workflow, overcoming issues with past expert systems?

Concrete Example: Dr. Jones is an experienced internal medicine physician. He has seen many health IT products come and go. Most of all he has faith in his training and experience having managed thousands of patients over 30 years. Why should he trust a tool that tells him what to do clinically?

Key Insights: The brainstorming identified two top potential application areas: (a) Medical notes/voice to text, and (b) Managing at the population/cohort level. The biggest “aha’s” in the discussion were:

- Need to automate data capture in the workflow

- Change the doctors’ perceptions that the models are a black box: Involve doctors in the process (if it’s a homegrown solution). Let them see the models, how they were produced and validated, etc.

- Pushing insights into the workflow at the right time is important – when will the insights be most valued?

5. Problem Statement: Healthier Diets: How can we leverage generative AI to help people with chronic diseases to have healthier diets?

Concrete Example: Sophie is a patient with type 2 diabetes who is struggling with her daily diet. She tried to search online and got an overwhelming number of do’s and don’ts. Some of the recommended foods seem very healthy, but she doesn’t like the taste. There is also a significant amount of medical terminology that she can’t understand.

Key Insights: The goal is to enable “DietGPT – Your Personal Dietitian” to make healthy and enjoyable diet recommendations. It is critical to address several challenges in order to build a trustworthy application: (1) not achieving the goal (healthy but not enjoyable, or vice versa), (2) bias/equity, (3) edge cases/risk, (4) evaluation, (5) liability, and (6) behavior change/adherence. The team discussed strategies to mitigate these problems, such as having humans in the loop and positioning the tool as education or in an assistant role.

6. Problem Statement: Healthcare Resource Optimization: How can Generative AI be employed to optimize healthcare resource allocation, including staff scheduling, patient prioritization, and supply chain management?

Concrete Example: Dr. Susan, a family physician, runs a small clinic in a suburban neighborhood. She has a dedicated team of medical coders and billers who handle the administrative side of her practice. The medical coders in Susan’s clinic are responsible for translating patient diagnoses, treatments, and procedures into universally recognized medical codes. However, due to the complexity and variability of medical notes, as well as the vast number of codes in systems like the International Classification of Diseases (ICD) and the Current Procedural Terminology (CPT), errors often occur. These errors can lead to claims being denied by insurance companies, resulting in lost revenue for the clinic.

Key Insights:

1. Start Small and Scale Up: Begin by identifying repetitive tasks or frequent codes as the initial target for piloting the use of generative AI. This approach can help identify any issues early on, limiting their potential impact and making it easier to correct them.

2. Ensure Seamless Integration with Existing Systems: Engage with EHR vendors or specialized IT consultants to ensure proper integration after pilot testing.

3. Provide Adequate Training: Both the technical and medical staff need adequate training to understand how to use the AI, troubleshoot problems, and interpret the AI’s outputs. The aim should be to develop a collaborative human-AI workforce, where each plays to their strengths.

4. Ensure Data Security and Compliance: An AI system’s data security measures should be rigorously tested before it’s implemented.

5. Establish Clear Lines of Responsibility: Ensure there are clear lines of responsibility among staff for managing the AI system, responding to any discrepancies or errors, and maintaining a high standard of care.

6. Engage Stakeholders Early: From physicians to coders and billers, everyone who will be affected by the AI system should be involved in the process early on. Their input can help identify potential issues before they become problems, and their buy-in can ensure smoother implementation.

7. Problem Statement: Payer/Insurer Challenge: How could generative AI help to improve communications and relationships with patients? For example, how could it help to decipher explanation-of-benefits notices and laboratory test results for readers unfamiliar with the language used in these communications?

Concrete Example: John is a patient who went to the wrong MRI imaging center (referred by his doctor) and the reimbursement was denied because it was not in-network. He now has a $2500 bill for a test that the insurance company is refusing to pay. John has a terrible impression of the payer and there have been many calls to sort it out. The provider is also concerned that they will not get paid.

Key Insights: On who would accept a solution and who would resist it, the upshot was the following:

- Providers and patients would accept solutions to this problem, but payers benefit from the confusion: as much as they want better patient/customer relationships, fixing this would result in more claims being paid, which would hurt their bottom line.

- As for the solution itself, we thought there could be passive listening (GenAI in Nuance or something similar) that follows the dialog between patient and physician during the appointment. If it seems like the physician is going to order an MRI, or even if the dialog suggests one would be required, the system automatically looks up the patient’s coverage, recommends the top 3-4 places the patient should be referred to, and sends a text message to the patient’s phone with descriptions of the options plus Google Maps and scheduling functionality, so the appointment with an “approved” MRI provider can be made while sitting there in the office.

- We talked about the provider databases being very bad (every payer’s website and provider lookups are terrible), so we also brainstormed that the information on payer-provider coverage could actually be an advertising-supported database. The providers would pay to be listed and would be required to include which plans they support, so that the info returned to the patient would be accurate and the providers would have a way to market to patients directly at the point of care.

- We talked about GenAI upstream from the provider visit: a symptom checker could predict the need for an MRI, order it automatically, and get it approved before the appointment, so that once the patient actually got into the provider’s office the MRI output would already be available for the provider to read and then recommend a course of treatment. It seemed like a waste of provider time to have them do a 15-minute appointment just to order the MRI.

- We also talked about the downstream case: if there were mistakes in the provider database and the patient still went to the wrong provider, who would pay? We didn’t have a great answer for that but wanted to recognize that the lookup might not always be accurate.

Other table group write-ups TBD (do not include at this point):

Problem Statement: Genome Sequencing: How can we use generative AI to synthesize new genomic sequences that either have similar properties to real human sequences, but because they are not real, can be shared without compromising patient privacy, or that have specific properties and could be used to insert new traits into, for example crops?

Key Insights: A process emerges: Data generation and inference; Representations; Synthetic Data; Privacy; Security. [need to elaborate]

—-

Problem Statement: To prevent cognitive decline in people with markers for Alzheimer’s, how can we come up with a healthcare system to support healthy behavior, using generative AI?

Persona Vignette: Patty’s mother lives in a memory-oriented assisted living facility and requires daily care. All of Patty’s aunts have experienced similar cognitive issues, beginning in their 60s. What should Patty do to assess her risk and delay the onset of cognitive decline?

Key Insights: Grouping around 3 areas: (a) Characterize cognitive decline (b) Manage data properly and (c) Make it acceptable to patients & families. Any ideas particularly interesting?

——

Problem Statement: There is a growing population of older patients with complex medical conditions – how can generative AI help care for patients outside of the traditional visit model?

Persona Vignette: Maria is a 72-year-old woman with history of hypertension, arthritis, and diabetes who lives by herself. She has difficulty with ambulation and does not have many friends or family in the area who can help drive her to appointments.

Key Insights: Non-intrusive monitoring system.

—Karen Matthys, DBDS Executive Director

How to Give Directly to DBDS?

Are you interested in supporting DBDS graduate programs, compute infrastructure, and/or DBDS faculty and trainee research?

We hope you will take advantage of this opportunity to directly support faculty working on the frontlines of current biomedical data science research. We would be truly grateful to have your support for our teaching and research missions.

To give directly to Biomedical Data Science, go to the online giving page. Under “Direct your gift” change “Med Fund” in the second menu to “Other Stanford Designation” and type “Department of Biomedical Data Science” in the “Other” field. You can choose the type of gift and the amount, and either log in to your Stanford account or continue as a guest to finish your donation.

Interested in exploring a partnership with DBDS?

Interested in a partnership with the Department of Biomedical Data Science (DBDS)? Please visit our External Engagement Program page.